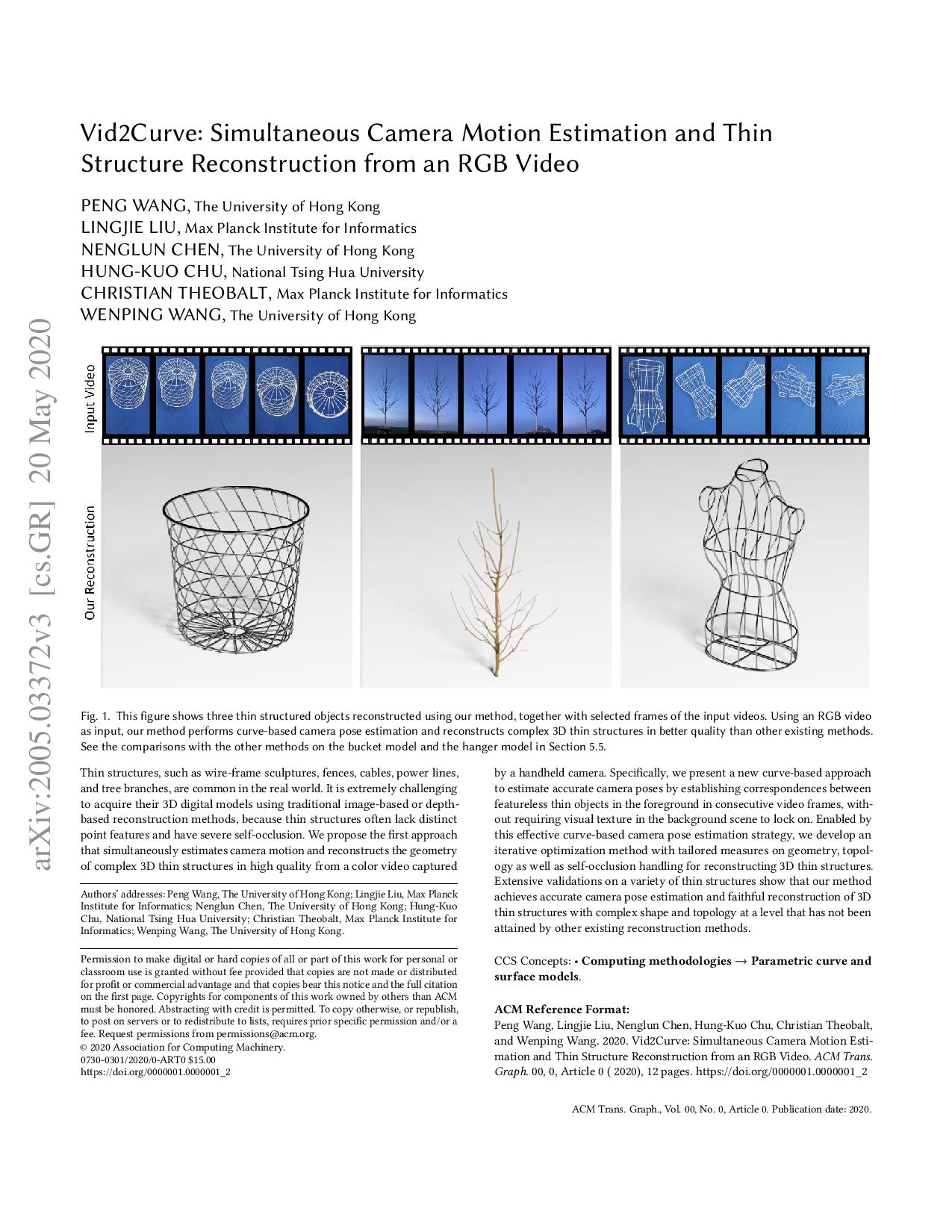

Vid2Curve: Simultaneous Camera Motion Estimation and Thin Structure Reconstruction from an RGB Video

The University of Hong Kong

Max Planck Institute for Informatics

The University of Hong Kong

National Tsing Hua University

Max Planck Institute for Informatics

The University of Hong Kong